Oct 25 2023

Aug 15 2023

When discussing Kafka at the Edge, one major question should always be asked.

Can and should I deploy Apache Kafka at the edge? The question comes up in nearly every industry that uses Kafka, including manufacturing, industrial automation, aviation, logistics, and retail. Answering it first requires some background on both Kafka and the edge. We assume you have basic Docker knowledge and are familiar with foundational distributed computing principles.

Before we get too deep into the details of Kafka at the Edge, let's quickly review some information about Kafka.

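Kafka is a streaming platform that has three key capabilities:

- Publish and subscribe to streams of records, similar to a message queue or enterprise messaging system.
- Store streams of records in a fault-tolerant, durable way.
- Process streams of records as they occur.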

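Kafka is generally used for two broad classes of applications:

- Building real-time streaming data pipelines that reliably get data between systems or applications.
- Building real-time streaming applications that transform or react to streams of data.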

To understand how Kafka does these things, let’s dive in and explore Kafka’s capabilities from the bottom up.

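Foundational concepts to remember:

- Kafka runs as a cluster on one or more servers that can span multiple data centers.
- The Kafka cluster stores streams of records in categories called topics.
- Each record consists of a key, a value, and a timestamp.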

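Kafka has four core APIs:

- The Producer API allows an application to publish a stream of records to one or more Kafka topics.
- The Consumer API allows an application to subscribe to one or more topics and process the stream of records produced to them.
- The Streams API allows an application to act as a stream processor, consuming input streams from one or more topics and producing output streams to one or more topics.
- The Connector API allows building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems.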

Multi-cluster and cross-data center deployments of Apache Kafka have become the norm rather than an exception. A Kafka deployment at the edge can be an independent project. However, in most cases, Kafka at the edge is part of an overall Kafka architecture. For this tutorial we will set up both a producer and consumer at the edge, but bear in mind that this is NOT a traditional or best-practice Kafka architecture.

Many reasons exist to create more than just one Kafka cluster in your organization:

When you want to deploy Kafka at the Edge, there are a few different paradigms that exist.

In order to be able to follow along with this tutorial, you need to have at least the following:

(Tip: you can run the following command to check whether Docker is installed and running properly.)

```shell
docker run hello-world
```

If you see an error, you might have missed a step. Otherwise, Docker is running on your machine and you can continue to the next step.
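If you prefer a check that does not pull and run a container, here is a minimal sketch built from two hypothetical helpers, `require_cmds` and `docker_ready`. It only confirms that the listed commands are on your PATH and that `docker info` can reach the Docker daemon; which commands to check is an assumption, so adjust the list to your own setup.

```shell
#!/usr/bin/env bash
# Preflight sketch (hypothetical helpers, not part of the original tutorial).
# "docker" is the only command this guide strictly needs; add others as you like.

# require_cmds CMD... : returns 0 if every CMD is on PATH, 1 otherwise
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing required command: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# docker_ready : returns 0 if the docker CLI exists and can reach the Docker daemon
docker_ready() {
  require_cmds docker || return 1
  # `docker info` exits non-zero when the daemon is not running or not reachable
  docker info >/dev/null 2>&1
}
```

For example, `require_cmds docker nano && docker_ready && echo "Docker is ready"` checks for both Docker and the editor used later in this guide.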

To get started, we need to make sure we have the following information:

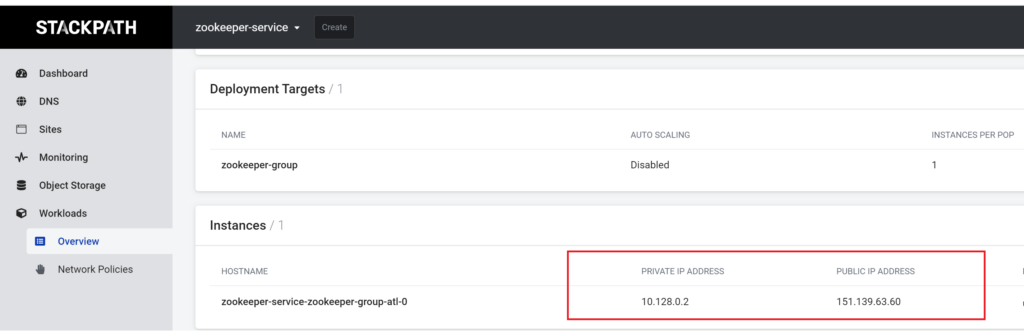

If you have Zookeeper up and running on StackPath, you can retrieve your Zookeeper private IP address from the Workload page.

To test Kafka at the edge, we will use a Kafka Docker image as our parent image and add a bash script that sets up Kafka, starts it, and then consumes or produces a data stream. We will build a single container image that is capable of both producing and consuming data streams, depending on how it is configured.

How to complete this step:

First, run the following command in your terminal:

```shell
mkdir kafka-at-the-edge
```

Then change into the directory:

```shell
cd kafka-at-the-edge
```

Run the following to create a new, empty Dockerfile:

```shell
touch Dockerfile
```

Edit the Dockerfile. We will be using nano, but you can use the editor of your choice:

```shell
nano Dockerfile
```

Paste the following contents:

```dockerfile
FROM docker.io/bitnami/kafka:2

COPY scripts/run.sh /opt/bitnami/scripts/kafka/
```

Info:

The FROM instruction selects our parent image, docker.io/bitnami/kafka:2.

The COPY instruction overwrites the image's run.sh in /opt/bitnami/scripts/kafka/ with our own version (we will create it shortly).

Create a scripts directory with the following command:

```shell
mkdir scripts
```

Then create the run.sh script we mentioned earlier and make it executable, because COPY keeps the file's permissions and the container runs the script directly:

```shell
touch ./scripts/run.sh
chmod +x ./scripts/run.sh
```

Finally, let's write the run script. We have provided one below. It is written purely for educational purposes, so it can, and should, look quite different from a script running in production.

```bash
#!/bin/bash
# shellcheck disable=SC1091

set -o errexit
set -o nounset
set -o pipefail
# set -o xtrace # Uncomment this line for debugging purposes

# Load libraries shipped with the Bitnami Kafka image
. /opt/bitnami/scripts/libkafka.sh
. /opt/bitnami/scripts/libos.sh

##### Constants
BOOTSTRAP_SERVER=localhost:9092
REPLICATION_FACTOR=1
PARTITIONS=1
# Log line the broker prints once it is up
STARTED_SIGNAL="started (kafka.server.KafkaServer)"

# Load Kafka environment variables
eval "$(kafka_env)"

# Point the JVM at the JAAS file when SASL listeners are configured
if [[ "${KAFKA_CFG_LISTENERS:-}" =~ SASL ]] || [[ "${KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP:-}" =~ SASL ]]; then
    export KAFKA_OPTS="-Djava.security.auth.login.config=${KAFKA_CONF_DIR}/kafka_jaas.conf"
fi

flags=("$KAFKA_CONF_FILE")
[[ -z "${KAFKA_EXTRA_FLAGS:-}" ]] || flags=("${flags[@]}" "${KAFKA_EXTRA_FLAGS[@]}")
START_COMMAND=("$KAFKA_HOME/bin/kafka-server-start.sh" "${flags[@]}")

info "** Starting Kafka **"
# The broker runs inside a process substitution (so it keeps running in the
# background); grep returns as soon as the broker logs that it has started
if am_i_root; then
    grep -q "$STARTED_SIGNAL" <(exec gosu "$KAFKA_DAEMON_USER" "${START_COMMAND[@]}")
else
    grep -q "$STARTED_SIGNAL" <(exec "${START_COMMAND[@]}")
fi

info "** CHECKING TOPIC **"
# Default to "no" so an unset CREATE_TOPIC does not trip nounset
if [ "${CREATE_TOPIC:-no}" = "yes" ]; then
    # "|| true" keeps errexit from stopping the script if the topic already exists
    /opt/bitnami/kafka/bin/kafka-topics.sh --create \
        --bootstrap-server "$BOOTSTRAP_SERVER" \
        --replication-factor "$REPLICATION_FACTOR" \
        --partitions "$PARTITIONS" \
        --topic "$TOPIC" || true
fi

info "** CHECKING ROLE **"
if [ "$KAFKA_ROLE" = "CONSUME" ]; then
    info "** Starting Kafka Console Consumer **"
    /opt/bitnami/kafka/bin/kafka-console-consumer.sh --bootstrap-server "$BOOTSTRAP_SERVER" --topic "$TOPIC" --from-beginning
else
    info "** Starting Kafka Console Producer **"
    echo "THIS IS A TEST MESSAGE FOR THE TOPIC - $TOPIC" | /opt/bitnami/kafka/bin/kafka-console-producer.sh --broker-list "$BOOTSTRAP_SERVER" --topic "$TOPIC"
fi
```

Now that we have a run script, let's build the Docker image with the following command, substituting your own image name and tag.

```shell
docker build . -t <image-name>:<tag>
```

Here is an example:

```text
chrisaltamimi-epsilonasi@ubuntu:~/workspace/kafka/ $ docker build . -t epsilonasi/kafka:3
Sending build context to Docker daemon 5.12kB
Step 1/2 : FROM docker.io/bitnami/kafka:2
 ---> d7dace0ab82e
Step 2/2 : COPY scripts/run.sh /opt/bitnami/scripts/kafka/
 ---> Using cache
 ---> 845c9382af6e
Successfully built 845c9382af6e
Successfully tagged epsilonasi/kafka:3
```

Once the image is built, push it to your registry. In this tutorial we push it to Docker Hub:

```text
chrisaltamimi-epsilonasi@ubuntu:~/workspace/kafka/ $ docker push epsilonasi/kafka:3
```

**Well done!** You have created a Kafka container image and pushed it to a container registry. We will now deploy it to StackPath!
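If you rebuild the image often, the build and push steps can be wrapped in a small helper. This is a minimal sketch, not part of the original tutorial: `build_and_push`, `run_or_echo`, and the `DRY_RUN` switch are hypothetical names, and the helper assumes you run it from the kafka-at-the-edge directory. It also sets the executable bit on scripts/run.sh before building, since COPY keeps the file mode from the build context.

```shell
#!/usr/bin/env bash
# Build-and-push helper sketch (hypothetical; not part of the original tutorial).
# Run it from the kafka-at-the-edge directory. DRY_RUN=1 prints the docker
# commands instead of running them.

# run_or_echo CMD... : prints CMD when DRY_RUN=1, otherwise executes it
run_or_echo() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# build_and_push IMAGE : for example, build_and_push epsilonasi/kafka:3
build_and_push() {
  if [ $# -ne 1 ]; then
    echo "usage: build_and_push <image-name>:<tag>" >&2
    return 2
  fi
  if [ ! -f scripts/run.sh ]; then
    echo "scripts/run.sh not found; run this from the kafka-at-the-edge directory" >&2
    return 1
  fi
  # COPY keeps the file mode, so make run.sh executable before building
  # (this step runs even in dry-run mode)
  chmod +x scripts/run.sh
  run_or_echo docker build . -t "$1" || return 1
  run_or_echo docker push "$1"
}
```

For example, `DRY_RUN=1 build_and_push epsilonasi/kafka:3` prints the two docker commands so you can review them, and dropping `DRY_RUN=1` runs them.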

Getting started is easy! In order to run Kafka on StackPath we simply do the following:

Let's get started. If you have made it this far in the guide, we assume you have met the requirements and completed the “getting prepared” sections above.

Info:

In this guide we will deploy TWO workloads. One will be our consumer and one will be our producer.

How to complete this step

Name your workload, then select the workload type and enter our image:

epsilonasi/kafka:3

This will be the workload we use to consume messages. In this example exercise, the kafka-producer workload will simply send a test message, which this consumer prints.

Click Continue

Info:

There are a few environment variables we will need to deploy Kafka properly.

- KAFKA_CFG_ZOOKEEPER_CONNECT. Set this to your Zookeeper IP address and port number. If you need help locating this, see the information above titled Retrieving the Zookeeper IP address and port number.
- ALLOW_PLAINTEXT_LISTENER. Set this to yes. This setting allows us to easily set up and test our Kafka instances at the edge.
- CREATE_TOPIC. For this first workload, we tell Kafka to create the topic we choose, so set this to yes.
- KAFKA_ROLE. Since we want this container to be a consumer, we tell Kafka so by setting this to CONSUME.
- TOPIC. This is the topic that this Kafka container will listen to. For this guide, set it to testTopic.

How to complete this step:

First, define the environment variables as shown above.

Second, set public ports only if a Kafka instance outside this workload's private network needs to reach it.

No commands need to be set, so your workload settings should look like this:

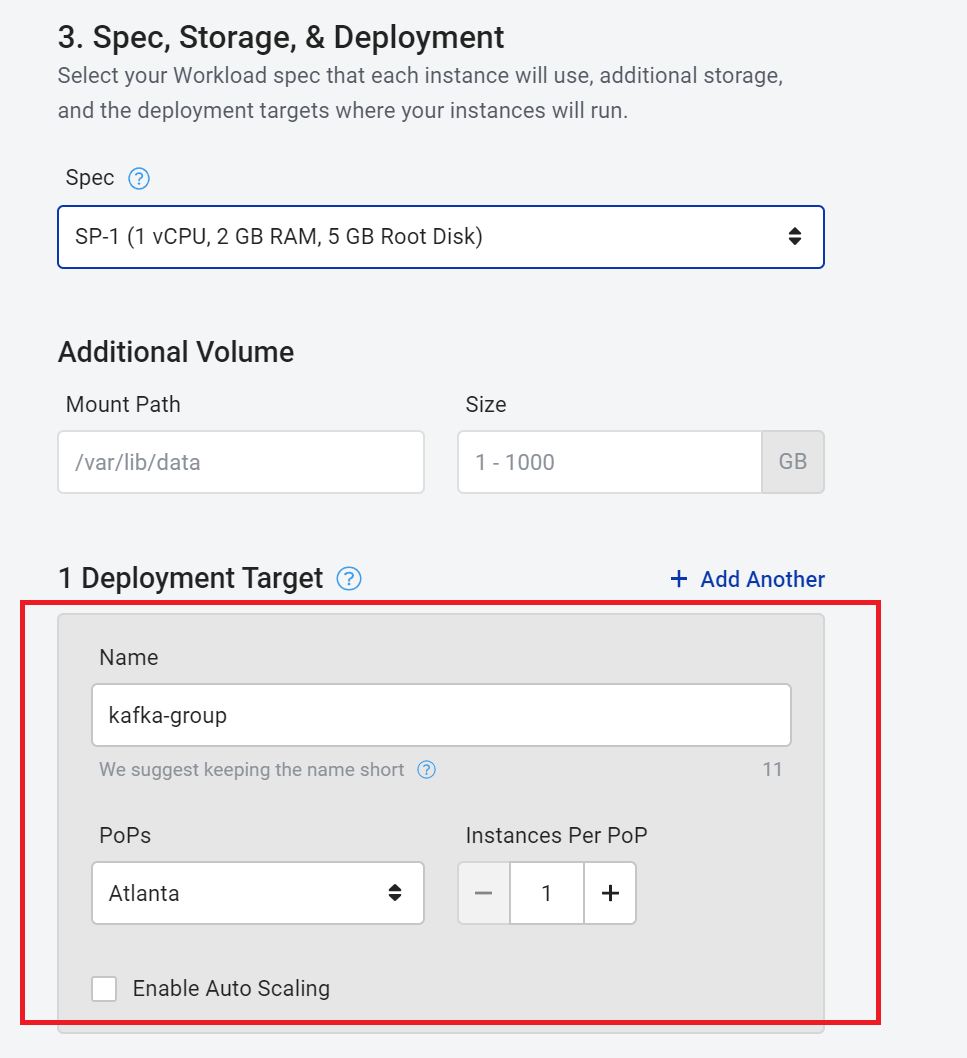

How to complete this step:

When you are done, save the workload to proceed.
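To double-check these values, for example when testing the image locally, here is a minimal sketch. `validate_kafka_env` is a hypothetical helper (StackPath does not run it) that applies this guide's rules to shell variables with the same names. It assumes a single `<zookeeper-ip>:<port>` value, so a multi-host Zookeeper connection string would be rejected.

```shell
#!/usr/bin/env bash
# Hypothetical helper: checks the workload variables described in this guide.
# Assumes a single Zookeeper address of the form <ip>:<port>.

validate_kafka_env() {
  local status=0
  local zk="${KAFKA_CFG_ZOOKEEPER_CONNECT:-}"
  local host="${zk%:*}"   # text before the last colon
  local port="${zk##*:}"  # text after the last colon
  local zk_ok=yes

  # No colon leaves host equal to zk; the port must be non-empty and all digits
  case "$port" in
    ''|*[!0-9]*) zk_ok=no ;;
  esac
  if [ -z "$host" ] || [ "$host" = "$zk" ]; then
    zk_ok=no
  fi
  if [ "$zk_ok" = no ]; then
    echo "KAFKA_CFG_ZOOKEEPER_CONNECT must look like <zookeeper-ip>:<port>" >&2
    status=1
  fi

  if [ "${ALLOW_PLAINTEXT_LISTENER:-}" != "yes" ]; then
    echo "ALLOW_PLAINTEXT_LISTENER should be set to yes for this tutorial" >&2
    status=1
  fi
  case "${CREATE_TOPIC:-}" in
    yes|no) ;;
    *) echo "CREATE_TOPIC should be yes or no" >&2; status=1 ;;
  esac
  case "${KAFKA_ROLE:-}" in
    CONSUME|PRODUCE) ;;
    *) echo "KAFKA_ROLE should be CONSUME or PRODUCE" >&2; status=1 ;;
  esac
  if [ -z "${TOPIC:-}" ]; then
    echo "TOPIC must not be empty" >&2
    status=1
  fi
  return "$status"
}
```

For example, with the consumer's values set in your shell (using your own Zookeeper address in place of a placeholder such as `10.0.0.5:2181`), `validate_kafka_env && echo OK` prints OK, while a missing port or a misspelled role prints a message on stderr and returns a non-zero status.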

How to complete this step

Name your workload, then select the workload type and enter our image:

epsilonasi/kafka:3

This will be the workload we use to produce messages for consumption. In this example exercise, the kafka-producer will simply send a test message.

Click Continue

Info:

There are a few environment variables we will need to deploy Kafka properly.

- KAFKA_CFG_ZOOKEEPER_CONNECT. Set this to your Zookeeper IP address and port number. If you need help locating this, see the information above titled Retrieving the Zookeeper IP address and port number.
- ALLOW_PLAINTEXT_LISTENER. Set this to yes. This setting allows us to easily set up and test our Kafka instances at the edge.
- CREATE_TOPIC. The consumer workload already created our topic, so set this to no.
- KAFKA_ROLE. Since we want this container to be a producer, we tell Kafka so by setting this to PRODUCE.
- TOPIC. This is the topic that this Kafka container will produce to. For this guide, set it to testTopic.

How to complete this step:

First, define the environment variables as shown above.

Second, set public ports only if a Kafka instance outside this workload's private network needs to reach it.

No commands need to be set, so your workload settings should look like the consumer workload's settings, apart from the environment variables.
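To try both roles on one machine before deploying, you could keep each workload's variables in an env file and pass it to `docker run --env-file`. This is a sketch under stated assumptions, not part of the tutorial: `write_role_env` and the file names are hypothetical, and `10.0.0.5:2181` below is only a placeholder Zookeeper address. The helper writes the five variables this guide uses, one `VAR=value` per line, which is the format Docker's env files use.

```shell
#!/usr/bin/env bash
# Env-file sketch (hypothetical helper, not part of the original tutorial).
# Writes the five variables from this guide, one VAR=value per line.

# write_role_env ROLE CREATE_TOPIC TOPIC ZK_CONNECT FILE
write_role_env() {
  if [ $# -ne 5 ]; then
    echo "usage: write_role_env ROLE CREATE_TOPIC TOPIC ZK_CONNECT FILE" >&2
    return 2
  fi
  # Unquoted EOF: $1..$4 are expanded into the file
  cat > "$5" <<EOF
KAFKA_CFG_ZOOKEEPER_CONNECT=$4
ALLOW_PLAINTEXT_LISTENER=yes
CREATE_TOPIC=$2
KAFKA_ROLE=$1
TOPIC=$3
EOF
}
```

For example, `write_role_env CONSUME yes testTopic 10.0.0.5:2181 consumer.env` and `write_role_env PRODUCE no testTopic 10.0.0.5:2181 producer.env`, followed by `docker run --rm --env-file consumer.env epsilonasi/kafka:3`. Both containers still need to reach your Zookeeper instance.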

How to complete this step:

You are now ready to send messages between the two Kafka instances. The container is set up to send a single test message from your producer to your consumer, so check the container logs to see this happen!

First, check the producer logs. They should look something like this:

```text
INFO ==> ** Starting Kafka setup **
WARN ==> You set the environment variable ALLOW_PLAINTEXT_LISTENER=yes. For safety reasons, do not use this flag in a production environment.
INFO ==> Initializing Kafka...
INFO ==> No injected configuration files found, creating default config files
INFO ==> ** Kafka setup finished! **
INFO ==> ** Starting Kafka **
INFO ==> ** CHECKING TOPIC **
INFO ==> ** CHECKING ROLE **
INFO ==> ** Starting Kafka Console Producer **
```

Here we see that our Kafka container has successfully started, verified that it is a producer, and started producing messages.

To see whether the message was successfully sent, let's take a look at our consumer logs.

They should look something like this:

```text
...
INFO ==> ** Kafka setup finished! **
INFO ==> ** Starting Kafka **
INFO ==> ** CHECKING TOPIC **
INFO ==> ** CHECKING ROLE **
INFO ==> ** Starting Kafka Console Consumer **
THIS IS A TEST MESSAGE FOR THE TOPIC - testTopic
```

That's it! You did it!
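If you run the containers locally with Docker, you can confirm delivery from a script instead of reading the logs by eye. This is a minimal sketch: `log_has_test_message` is a hypothetical helper that reads log text on stdin and succeeds only if a line exactly matches the test message run.sh sends for the given topic.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if log text on stdin contains the exact test
# message that run.sh produces for TOPIC.

# log_has_test_message TOPIC < logfile
log_has_test_message() {
  if [ $# -ne 1 ]; then
    echo "usage: log_has_test_message TOPIC" >&2
    return 2
  fi
  # -F: treat the message as a fixed string; -x: the whole line must match
  grep -qxF "THIS IS A TEST MESSAGE FOR THE TOPIC - $1"
}
```

For example, `docker logs kafka-consumer 2>&1 | log_has_test_message testTopic && echo "delivered"`, where `kafka-consumer` stands in for whatever name your local consumer container has.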

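In this tutorial we learned a few things. First, Kafka is a streaming platform with three key capabilities: publishing and subscribing to streams of records, storing them durably, and processing them as they occur.

Second, Kafka is generally used for two broad classes of applications: real-time streaming data pipelines and real-time streaming applications.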

But more importantly, we spent some time deploying Kafka on StackPath and even tested out sending a message from one instance to be consumed by the other! We were able to discuss when and why you would want to deploy Kafka at the edge, and we even showed you how to do it.

For more, we encourage you to visit other StackPath tutorials.